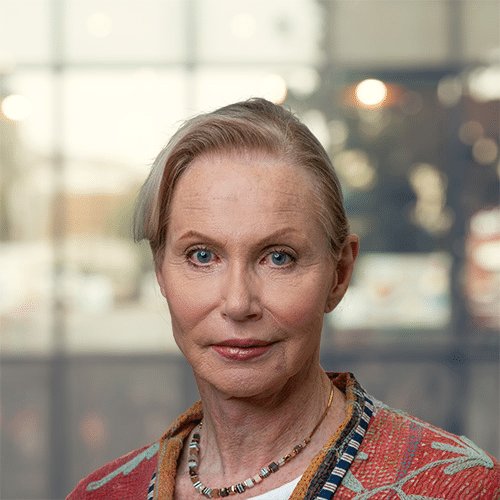

As artificial intelligence (AI) continues to evolve, its application in Norm Engineering (NE) holds immense promise. However, this transformative power brings significant ethical and practical challenges that must be addressed to ensure these systems serve society fairly and effectively. In a previous blog post, we interviewed Lydia Meijer, Senior Strategist at TNO and former professor at the University of Amsterdam, to explore the concept of AI-enabled Norm Engineering. In this follow-up, Meijer discusses the ethical challenges of AI and how it can be used responsibly in this rapidly developing field.

Understanding the ethical landscape

AI-enabled Norm Engineering, which uses AI to interpret, apply, and enforce legal norms, raises pressing ethical questions. The primary challenge is ensuring these systems operate fairly, transparently, and in alignment with societal values.

Bias and fairness in decision-making

AI systems learn from data, and when that data is biased, the outcomes can be unfair. For NE, this could mean unequal access to rights or services, potentially reinforcing systemic inequities. Addressing these biases is essential to prevent such harm.

Identifying and mitigating biases in legal datasets is complex. These datasets often reflect historical inequities, and correcting them requires technical expertise and a deep understanding of social justice principles. Regular reviews and updates are necessary to detect and correct emerging biases that could undermine fairness.

Bias in NE systems extends beyond data. Algorithm design can unintentionally prioritize certain outcomes over others. Ensuring fairness requires a comprehensive approach: rigorous testing, diverse data representation, and collaboration between technologists and legal professionals.
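
Part of the "regular reviews" described above can be automated. The sketch below is a minimal illustration, assuming decisions are logged as hypothetical `(group, approved)` pairs: it compares approval rates across groups and flags any group whose rate falls below 80% of the best-served group's rate. The 0.8 threshold borrows the "four-fifths rule" heuristic from US employment guidance; it is one screening signal among many, not a legal test of fairness in NE, and the function names are our own.

```python
from collections import defaultdict


def approval_rates(decisions):
    """Return the share of approved outcomes per group.

    `decisions` is an iterable of (group, approved) pairs (hypothetical log format).
    """
    totals = defaultdict(int)
    approved = defaultdict(int)
    for group, ok in decisions:
        totals[group] += 1
        if ok:
            approved[group] += 1
    return {g: approved[g] / totals[g] for g in totals}


def disparity_flags(decisions, threshold=0.8):
    """Flag groups whose approval rate is below `threshold` times the
    highest group's rate (the "four-fifths" screening heuristic)."""
    rates = approval_rates(decisions)
    best = max(rates.values())
    if best == 0:
        return {}  # nobody is approved, so relative rates are undefined
    return {g: r / best for g, r in rates.items() if r / best < threshold}


# Usage: group A is approved 8/10 times, group B 5/10 times.
sample = [("A", True)] * 8 + [("A", False)] * 2 + [("B", True)] * 5 + [("B", False)] * 5
print(disparity_flags(sample))  # {'B': 0.625}
```

A flag here is a prompt for the human review the text calls for, since a disparity may have a legitimate legal explanation or may point to biased data or rules.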

Transparency and the black box problem

A major concern surrounding AI is the "black box" problem, where the processes behind AI decisions are opaque and difficult to understand. This lack of transparency is particularly problematic in NE, where decisions can have significant legal and societal consequences.

“It needs to be explainable,” Meijer insists. She advocates for systems that provide clear reasoning behind their decisions. For example, if a system denies an export request or social benefit application, it should explain the rationale in plain language. Transparency not only builds trust but also allows users to challenge decisions that seem incorrect or unfair.

Explainable AI techniques are increasingly used to make decision-making processes easier to understand. These tools describe decisions in human terms, fostering greater accountability. However, implementing explainability in complex regulatory systems is challenging and requires ongoing research and innovation.
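
Meijer's example of a denied benefit application suggests one simple pattern: encode each norm as an explicit rule that records a plain-language reason every time it is checked, so the explanation is produced together with the decision. The sketch below assumes a hypothetical benefit scheme with an income limit and a residency requirement; the thresholds and function names are invented for illustration and do not reflect any real regulation. Note that this is a rule-based, explainable-by-construction approach, not a post-hoc explainability technique applied to a learned model.

```python
from dataclasses import dataclass, field

# Hypothetical eligibility norms; the figures are illustrative, not real law.
INCOME_LIMIT = 30_000
MIN_RESIDENCY_YEARS = 2


@dataclass
class Decision:
    approved: bool
    reasons: list = field(default_factory=list)  # plain-language explanations


def assess_benefit(income: int, residency_years: int) -> Decision:
    """Apply each norm in turn and record a reason for every check, pass or fail."""
    reasons = []
    approved = True

    if income > INCOME_LIMIT:
        approved = False
        reasons.append(f"Your annual income ({income}) exceeds the limit of {INCOME_LIMIT}.")
    else:
        reasons.append(f"Your annual income ({income}) is within the limit of {INCOME_LIMIT}.")

    if residency_years < MIN_RESIDENCY_YEARS:
        approved = False
        reasons.append(
            f"You have lived here for {residency_years} year(s); "
            f"at least {MIN_RESIDENCY_YEARS} are required."
        )
    else:
        reasons.append(f"You meet the residency requirement of {MIN_RESIDENCY_YEARS} years.")

    return Decision(approved, reasons)


# Usage: a denied application comes with the reasons an applicant could challenge.
result = assess_benefit(income=35_000, residency_years=3)
print(result.approved)
for reason in result.reasons:
    print("-", reason)
```

Because every check records its reason, an applicant who believes a rule was applied incorrectly can point to the specific step, which supports the ability to challenge decisions described above.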

Proportionality and subsidiarity in automated decisions

Another ethical challenge is ensuring that AI-driven actions respect proportionality and subsidiarity. In NE, proportionality means interventions must be appropriate to the scale of the issue, while subsidiarity means decisions are made at the most immediate or local level possible. For example, if an automated system shuts down telecommunications during an emergency, it must evaluate whether the action is justified and whether less disruptive alternatives exist. Meijer stresses the need for such systems to "evaluate the consequences: is it legitimate? Is it proportional?" Without these safeguards, AI risks aggravating problems rather than solving them.

Proportionality applies to smaller-scale interactions as well, such as eligibility checks for government programs, where proportionate decisions reduce the risk of unnecessary intrusions while maintaining compliance. Subsidiarity, in turn, emphasizes empowering local stakeholders to address issues directly rather than relying on distant, generalized decision-making processes.

Practical barriers to implementing Norm Engineering

In addition to ethical concerns, implementing AI in Norm Engineering comes with practical hurdles. These challenges stem from the complexity of legal systems and the need for human-AI collaboration.

- Inconsistencies in law interpretation: Legal norms are often open to interpretation, and different developers or systems may apply them inconsistently. Such disparities can undermine trust in automated systems. Meijer observes, "If two developers interpret the law differently, you end up with systems that treat people differently based on which developer wrote the code." NE aims to standardize how norms are applied, but achieving this requires careful design and validation. Collaborative coding approaches, where developers work alongside legal experts, can help ensure accurate interpretations of complex regulations. Additionally, shared repositories of validated legal interpretations can standardize implementation across systems. Inconsistencies also arise when laws overlap or conflict, particularly in international contexts. Resolving these discrepancies requires algorithms capable of recognizing and reconciling conflicts so that norms are applied uniformly across diverse legal frameworks.

- Training AI on complex and evolving legal frameworks: AI systems require large amounts of data to function, but legal systems are complex and constantly evolving. Keeping AI up to date with the latest laws and regulations is challenging, especially when rapid updates are needed for compliance. Generative AI can help speed up the process of interpreting and applying laws, but it must be integrated with human oversight to ensure accuracy. AI must also be trained to understand the interconnectedness of laws across jurisdictions, such as international trade regulations that overlap with national laws. Continuous updates to datasets and algorithms are crucial for accuracy. Collaboration between AI developers and legal institutions is essential for timely updates, incorporating new laws, and reflecting changes in legal interpretations.
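
The shared repository of validated interpretations mentioned above, combined with the need to track changing laws, can be sketched as a registry in which every system looks up the same vetted rule, versioned by the date it takes effect. The sketch below is hypothetical throughout: the class names, the `"export-licence"` norm, its value thresholds, and the sign-off field are invented for illustration and do not describe an existing TNO or NE system.

```python
from dataclasses import dataclass
from datetime import date
from typing import Callable


@dataclass(frozen=True)
class NormVersion:
    norm_id: str
    valid_from: date                # date this interpretation takes effect
    rule: Callable[[dict], bool]    # the validated interpretation, as code
    validated_by: str               # legal expert or team who signed off


class NormRegistry:
    """Single shared source of validated interpretations, versioned by effective date."""

    def __init__(self):
        self._versions = {}  # norm_id -> list of NormVersion, sorted by valid_from

    def register(self, version: NormVersion) -> None:
        versions = self._versions.setdefault(version.norm_id, [])
        versions.append(version)
        versions.sort(key=lambda v: v.valid_from)

    def rule_for(self, norm_id: str, on: date) -> NormVersion:
        """Return the interpretation in force on the given date."""
        in_force = [v for v in self._versions.get(norm_id, []) if v.valid_from <= on]
        if not in_force:
            raise LookupError(f"No validated interpretation of {norm_id} in force on {on}")
        return in_force[-1]


# Usage: a hypothetical export threshold is tightened mid-2024.
registry = NormRegistry()
registry.register(NormVersion("export-licence", date(2023, 1, 1),
                              lambda case: case["value"] <= 10_000, "legal team A"))
registry.register(NormVersion("export-licence", date(2024, 7, 1),
                              lambda case: case["value"] <= 5_000, "legal team A"))

case = {"value": 8_000}
print(registry.rule_for("export-licence", date(2024, 1, 15)).rule(case))  # True
print(registry.rule_for("export-licence", date(2024, 8, 1)).rule(case))   # False
```

Because every system calls `rule_for` instead of re-implementing the law, two developers cannot silently diverge, and an update made with legal institutions takes effect everywhere on its effective date.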

Building trust through strong safeguards

Trust is the cornerstone of successful AI implementation, particularly in sensitive areas like Norm Engineering. To build trust, systems must include robust safeguards to prevent misuse and ensure accountability.

- Ensuring human oversight: While AI can manage the heavy lifting in NE, human oversight remains indispensable. Meijer underscores the importance of human involvement in critical decisions: "There must always be human oversight, especially in cases that are more nuanced or carry significant ethical implications." Human involvement ensures decisions are not only technically correct but also ethically and socially acceptable.

- Rigorous testing and validation processes: Testing ensures that Norm Engineering systems function as intended. Key tests include stress-testing scenarios, validating data inputs, and auditing ethical compliance. These tests are crucial for identifying vulnerabilities and ensuring robustness in real-world applications. For example, test cases might simulate scenarios in environmental compliance systems, such as conflicting agency regulations or discrepancies in applicant information. Regular audits ensure systems remain effective as laws evolve. Validation processes should involve diverse stakeholders, including legal experts, technologists, and end users, to capture a range of perspectives. Collaborative testing frameworks further enhance system reliability and adaptability.

- Establishing clear accountability mechanisms: When AI systems make errors, processes must be in place to identify the cause, correct the issue, and assist affected parties. Robust documentation of decision-making enables investigators to trace errors to their source. This accountability protects users, makes decisions transparent, deters misuse, and strengthens public trust in AI implementations.
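
Human oversight and traceable documentation can reinforce each other: every automated outcome is written to a log, and cases that need human judgment are held back from becoming final. The sketch below is a minimal illustration under stated assumptions. The routing policy (adverse or nuanced cases go to a person) and all names are hypothetical. The log uses hash chaining, a standard tamper-evidence technique in which each entry stores a hash that covers the previous entry, so an investigator can detect whether any record was altered after the fact.

```python
import hashlib
import json
from dataclasses import dataclass, field


def _digest(prev_hash: str, record: dict) -> str:
    """Hash the previous entry's hash together with this record."""
    payload = json.dumps(record, sort_keys=True)
    return hashlib.sha256((prev_hash + payload).encode()).hexdigest()


@dataclass
class DecisionLog:
    """Append-only decision log; each entry is chained to the one before it."""
    entries: list = field(default_factory=list)

    def append(self, record: dict) -> dict:
        prev_hash = self.entries[-1]["hash"] if self.entries else ""
        entry = {"record": record, "prev": prev_hash, "hash": _digest(prev_hash, record)}
        self.entries.append(entry)
        return entry

    def verify(self) -> bool:
        """Recompute the chain; any edited or reordered entry breaks it."""
        prev_hash = ""
        for entry in self.entries:
            if entry["prev"] != prev_hash or entry["hash"] != _digest(prev_hash, entry["record"]):
                return False
            prev_hash = entry["hash"]
        return True


def route(case_id: str, approved: bool, nuanced: bool, norm_version: str,
          log: DecisionLog) -> str:
    """Log every outcome; hold adverse or nuanced cases for a human reviewer
    (a hypothetical policy chosen for illustration)."""
    status = "pending_human_review" if (nuanced or not approved) else "final"
    log.append({"case": case_id, "approved": approved,
                "norm_version": norm_version, "status": status})
    return status


# Usage
log = DecisionLog()
print(route("case-001", approved=True, nuanced=False, norm_version="v2", log=log))   # final
print(route("case-002", approved=False, nuanced=False, norm_version="v2", log=log))  # pending_human_review
print(log.verify())  # True
```

Recording the norm version with each decision lets investigators see which interpretation of the law was applied, which is what makes tracing an error to its source practical.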